Instruction Paradigm - An alternative to crowdsourcing

The prevailing way to create a dataset is crowdsourcing, which is expensive and risks introducing annotation artifacts into the data.

The advent of state-of-the-art (SOTA) language models such as GPT-3 and T0_pp, which support text generation via prompting, raises an interesting hypothesis: “Can the instruction paradigm replace humans with machines for dataset creation?” In this project, we test this hypothesis. Confirming it would let us build an NLP pipeline in which:

i. Bias introduced by human annotators' intuition is eliminated.

ii. Labor costs are reduced.

iii. Dataset cleaning, aggregation, and maintenance can be built into the pipeline.

The project was structured as follows:

- Dataset selection: I considered the MNLI (Multi-Genre Natural Language Inference), CoLA (Corpus of Linguistic Acceptability), and QQP (Quora Question Pairs) datasets from the GLUE (General Language Understanding Evaluation) benchmark for this task.

- Designing an NLP pipeline using Sentence-BERT and running it on the original datasets to establish a baseline.

- Applying prompt engineering methods such as prompt mining (deriving prompts by inspecting the datasets), prompt paraphrasing, and prompt scoring to the GPT-3 and T0_pp models in both query and sentence-generation modes.

- Generating, filtering, and structuring the data produced by GPT-3 and T0_pp.
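The prompt paraphrasing and prompt scoring steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's code: the `TEMPLATES` wording is hypothetical, `generate` stands in for a GPT-3 or T0_pp call, and each paraphrase is scored by how often its output passes a caller-supplied `is_valid` check, since the project's actual scoring criterion is not described here.

```python
from typing import Callable

# Hypothetical MNLI hypothesis-generation prompts: two paraphrases per label.
# {premise} is filled in before the prompt is sent to the model.
TEMPLATES: dict[str, list[str]] = {
    "entailment": [
        'Premise: "{premise}"\nWrite a sentence that is definitely true given the premise:',
        'Given that "{premise}", write a statement that must also be true:',
    ],
    "neutral": [
        'Premise: "{premise}"\nWrite a sentence that might be true given the premise:',
        'Given that "{premise}", write a statement that may or may not be true:',
    ],
    "contradiction": [
        'Premise: "{premise}"\nWrite a sentence that is definitely false given the premise:',
        'Given that "{premise}", write a statement that cannot be true:',
    ],
}


def score_template(
    template: str,
    premises: list[str],
    generate: Callable[[str], str],
    is_valid: Callable[[str, str], bool],
) -> float:
    """Return the fraction of premises for which the template's output passes is_valid."""
    if not premises:
        return 0.0
    passed = 0
    for premise in premises:
        output = generate(template.format(premise=premise))
        passed += bool(is_valid(premise, output))
    return passed / len(premises)


def best_templates(
    premises: list[str],
    generate: Callable[[str], str],
    is_valid: Callable[[str, str], bool],
) -> dict[str, str]:
    """Keep the highest-scoring paraphrase for each label (the first one wins ties)."""
    return {
        label: max(candidates, key=lambda t: score_template(t, premises, generate, is_valid))
        for label, candidates in TEMPLATES.items()
    }
```

In practice `generate` would wrap a model API call and `is_valid` could reuse whatever filters are applied to the generated data; passing both in as functions keeps the scoring loop independent of any particular model.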

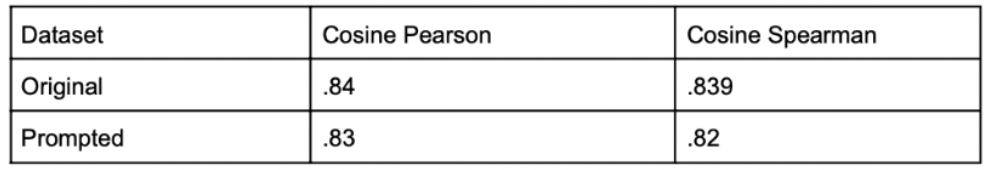

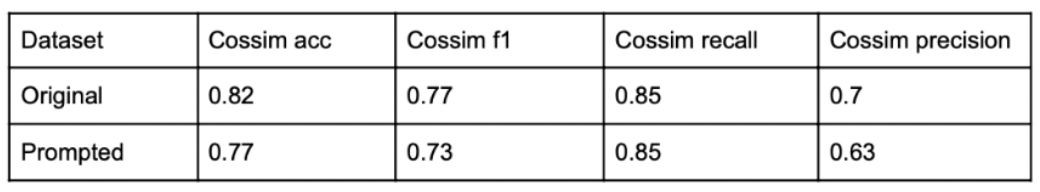
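The generating, filtering, and structuring step can be illustrated with a small sketch for MNLI-style data. The specific filters (label whitelist, hypothesis length bounds, copied-premise check, exact deduplication) and the JSON Lines output are assumptions chosen for illustration; the write-up does not say which filters were used.

```python
import json

LABELS = {"entailment", "neutral", "contradiction"}


def normalize(text: str) -> str:
    """Collapse runs of whitespace left over from generation."""
    return " ".join(text.split())


def filter_and_structure(
    raw: list[tuple[str, str, str]], min_words: int = 3, max_words: int = 40
) -> list[dict[str, str]]:
    """Turn raw (premise, hypothesis, label) triples into clean, deduplicated records."""
    seen: set[tuple[str, str]] = set()
    records = []
    for premise, hypothesis, label in raw:
        premise, hypothesis = normalize(premise), normalize(hypothesis)
        if label not in LABELS:
            continue  # model produced an unknown label
        if not min_words <= len(hypothesis.split()) <= max_words:
            continue  # degenerate or rambling generation
        if hypothesis.lower() == premise.lower():
            continue  # hypothesis just copies the premise
        key = (premise.lower(), hypothesis.lower())
        if key in seen:
            continue  # exact duplicate after normalization
        seen.add(key)
        records.append({"premise": premise, "hypothesis": hypothesis, "label": label})
    return records


def to_jsonl(records: list[dict[str, str]]) -> str:
    """Serialize records as JSON Lines, one example per line."""
    return "\n".join(json.dumps(record) for record in records)
```

Keeping each filter as a separate early `continue` makes it easy to count how many generations each rule removes, which helps when tuning prompts.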

- Evaluating the generated datasets against the baseline from step 2, using the test sets from the GLUE benchmark.
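The comparison against the baseline can be sketched as scoring both models on the same labeled split and reporting the gap. This sketch assumes gold labels are available locally for whichever split is used, and it reports plain accuracy for simplicity, although GLUE officially scores some tasks with other metrics (F1 alongside accuracy for QQP, Matthews correlation for CoLA).

```python
def accuracy(predictions: list[str], gold: list[str]) -> float:
    """Fraction of predictions that match the gold labels."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold labels must have the same length")
    if not gold:
        raise ValueError("evaluation set is empty")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


def compare_to_baseline(
    baseline_preds: list[str], generated_preds: list[str], gold: list[str]
) -> dict[str, float]:
    """Score both models on the same split and report the absolute and relative gap."""
    baseline = accuracy(baseline_preds, gold)
    generated = accuracy(generated_preds, gold)
    return {
        "baseline": baseline,
        "generated": generated,
        "gap": baseline - generated,  # positive means the generated data underperforms
        "relative": generated / baseline if baseline else float("nan"),
    }
```

The `relative` ratio is convenient for comparing tasks whose baseline scores differ a lot.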

Results

1. MNLI had good results

Example from the generated dataset:

Premise: She asks if the man in the bar was talking to someone in a bar.

Generated hypothesis: Very funny people who work at a bar.

Label: neutral
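For a premise/hypothesis pair like the one above, Sentence-BERT's classification setup encodes each sentence separately into vectors u and v and feeds the concatenation (u, v, |u - v|) to a softmax classifier. The sketch below shows only that feature construction; `toy_encode` is a hypothetical hashed bag-of-words stand-in for a real Sentence-BERT encoder, and whether the project's pipeline used exactly this objective is an assumption.

```python
import math
import zlib

DIM = 16  # toy embedding size; real Sentence-BERT models use sizes such as 384 or 768


def toy_encode(sentence: str, dim: int = DIM) -> list[float]:
    """Hypothetical stand-in for a Sentence-BERT encoder: hashed bag of words, L2-normalized."""
    vec = [0.0] * dim
    for token in sentence.lower().split():
        # crc32 is deterministic across runs, unlike Python's salted hash()
        vec[zlib.crc32(token.strip(".,?!").encode()) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def pair_features(premise: str, hypothesis: str) -> list[float]:
    """Concatenate (u, v, |u - v|), the input to the pair classifier."""
    u, v = toy_encode(premise), toy_encode(hypothesis)
    return u + v + [abs(a - b) for a, b in zip(u, v)]
```

In Sentence-BERT itself, a trainable linear layer with a softmax over the three MNLI labels sits on top of these features and is trained jointly with the encoder.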

2. QQP had good results

Example from the generated dataset:

Generated question 1: What is the name of the fictional country in the Harry Potter stories?

Generated question 2: Where is Harry Potter located?

3. CoLA

I was not able to generate a dataset with a distribution similar to that of CoLA. Manually created datasets containing syntactic errors also did not match the original benchmark distribution, according to the evaluation report.
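The evaluation report's exact criterion is not described here, so the following is only a hypothetical illustration of how a distribution mismatch could be quantified: build empirical distributions over label proportions (assuming GLUE's CoLA encoding, 1 = acceptable, 0 = unacceptable) and bucketed sentence lengths, then compare the generated and original sets with the total variation distance.

```python
from collections import Counter
from collections.abc import Hashable, Iterable


def distribution(values: Iterable[Hashable]) -> dict[Hashable, float]:
    """Empirical probability of each distinct value."""
    counts = Counter(values)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("cannot build a distribution from no values")
    return {value: count / total for value, count in counts.items()}


def total_variation(p: dict[Hashable, float], q: dict[Hashable, float]) -> float:
    """Total variation distance: 0.0 for identical distributions, 1.0 for disjoint ones."""
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in support)


def length_buckets(sentences: Iterable[str], width: int = 5) -> list[int]:
    """Bucket sentence lengths (in words) so small samples still give stable histograms."""
    return [len(s.split()) // width for s in sentences]
```

A large distance on either view (labels or lengths) would be one concrete signal that a generated set does not resemble the benchmark, although a real comparison would likely also look at lexical and syntactic features.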